Introduction

An ongoing debate concerns the epistemological stakes of computational methods in humanistic inquiry. What kind of evidence is a word embedding or a face detection, and what can it tell us? How do we account for nuances across cultural, temporal, and geographical frames when engaging in pattern recognition and identifying outliers? To what degree does the analysis of words and images through computational methods such as topic modeling and object detection reduce and capture the complexity of meaning-making in media such as literature and film? These questions animate a range of fields, garnering attention from popular higher education news outlets like The Chronicle of Higher Education, and they are also the subject of entire books (Wasielewski; Underwood; Arnold and Tilton, Distant Viewing).

Disciplines in the humanities have forged paths toward engaging with computational evidence that at times have been in parallel, intersected, and moved in opposite directions. Among the most well-lit roads, the discipline of literary studies has grappled with fears of a return to formalism, a search for form and structure that describes the elements of a work without accounting for cultural and historical contingencies. Combine formal components with the mathematical logic of computational methods and one risks a double formula of numerical and structural elements. Perhaps no area of literary studies has had more oncoming traffic than computational literary studies, where full-fledged dismissals of computational methods have consumed significant energy. We turn onto another road to look at the debates over formalism and form, addressing the possibilities and limits of computational evidence in humanities inquiry.

Drawing on conversations at the Dartmouth Computational Formalism Workshop in April 2023, we take scholar Dennis Yi Tenen’s statement that “formalism is different than the search for forms” as a point of departure. We turn our gaze toward an expanding area of computational humanities focused on visual AI. Rather than center on text and words, this area expands the gaze to address questions that animate fields such as film, TV, and comics studies. Navigating through analysis and theory from visual culture and media studies, we discuss how we can appropriate and design computer vision in unexpected ways that do not devolve into empty formalism and elide context. More particularly, in this essay we draw on prior research in this area conducted by the two current authors, together, separately, and with other collaborators. We take this published and forthcoming research, along with examples from unpublished work, and consider it through the lens of questions about computation, form, and history.

Through our discussion of this prior work, we show how it is possible to engage in creative mapping of humanities concepts to the computational results rather than simply relying on their out-of-the-box labels. We think of this as a type of “misuse,” even “incorrect,” in the most literal understanding of these computational algorithms; yet this creative space is what makes it possible to rework the models’ modes of viewing and outputs into analytical frames and results that animate the humanities. More specifically, we revisit this work to emphasize how formal computational analysis of basic structures of visual media can incorporate history and context by mapping historically situated concepts onto computationally formal elements. To demonstrate our point, we will briefly discuss how we have used forms to study three different visual mediums: photographs, television, and comic strips—and how computer vision enables new ways of understanding culturally situated approaches to making art, to telling stories, and to seeing visual mediums anew.

The first case study, revisiting work originally written by Taylor Arnold, Lauren Tilton, and Annie Berke, turns to U.S. TV sitcoms. Facial recognition algorithms were combined with methods for identifying shot lengths to analyze characterization across two major female-led sitcoms from the 1970s, created amidst the U.S. civil rights and feminist movements. Here, we revisit this past scholarship to consider questions of form that were elided in the initial article. More specifically, we show how the results of this article’s formal analysis do not obscure, but rather illuminate the gendered dynamics that define these television programs’ historical context. The second case study also involves an unexpected use of facial detection algorithms. Arnold, Tilton, and Wigard previously conducted research applying these algorithms to historical photography archives. Innovations in face detection and recognition are driven by technologies for automated machinery such as self-driving cars and surveillance such as CCTV. This research instead reappropriated these methods to consider the amount of visual space that faces occupy in the frame, which became a computational proxy for identifying photographic genres. In this current article, we expand on this past research to consider how mapping the results onto concepts such as portraiture and the exploration of visual tropes offers key cultural-historical context. The third case study discusses research by Arnold, Tilton, and Wigard, which combined a custom algorithm with manual annotation methods to detect a fundamental formal feature in U.S. syndicated comic strips: the panel. This previous research, which focused on comics by Charles Schulz, is discussed alongside forthcoming research by Wigard about comics from Lynn Johnston, as well as unpublished, original research from Wigard concerning the work of Morrie Turner. In this current article, we turn to these collected case studies to again demonstrate how against-the-grain uses of computer vision, and especially algorithmic glitches, provide historical and cultural insight through the formal aesthetics of media and challenge critiques of computational formalism as removing context. Our aim, overall, is to argue that formal analysis through computational methods provides a kind of evidence that can support the kind of aesthetic, social, and historical relations and nuances that animate humanistic inquiry.

Television: Computing Context through Characters

Art historian Amanda Wasielewski joins media studies scholar Miriam Posner, historian Jessica Marie Johnson, and ethnic studies scholar Roopika Risam when she aptly states that we must consider “that maybe there is no way to completely zoom out and get an accurate picture of the whole … no matter how much data around extant work is amassed, we will never have the full picture” (60). She situates this challenge in the context of computational methods’ epistemological tendency toward computational formalism, “a revival of formalist methods … facilitated by digital computing” (32). While Wasielewski is primarily concerned with the intersection of computational formalism and art history, her concern is well taken and shared by a range of fields that look cautiously at computational and digital humanities as well as cultural analytics. Key here is Wasielewski’s observation that computational formalism “analyzes the external features/qualities of the work, often to the exclusion of contextual factors” (32).

Keeping Wasielewski’s well-founded trepidations with computational formalism in mind, we turn to an example of work with sitcoms where historically situated concepts were mapped onto computationally formal elements. Our point draws on an article that Taylor Arnold, Lauren Tilton, and Annie Berke previously published in this same journal in 2019. Arnold, Tilton, and Berke looked at onscreen trends through face detection and recognition. Those formal elements were then mapped onto features of the show, specifically characters, to explore how visual style sends cultural and social messages through sitcoms. Arnold, Tilton, and Berke were not searching for forms as the final output of their findings. Rather, they argued that searching computationally for certain forms like who is on screen and for how long, or shot pacing plus on-screen characters, becomes a way to understand the social and cultural messages about women, feminism, and domestic life in the 1970s United States through TV.

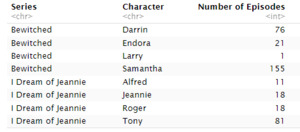

The study focused on the Network era of American television history (late 1950s to the 1980s), with a particular emphasis on the situational comedy, or “sitcom,” through a comparative analysis of two classic ones: Bewitched and I Dream of Jeannie (Arnold, Tilton, and Berke). For brief context, both shows featured small, all-white casts, formulaic storylines for each episode that now map onto the sitcom genre format, and light supernatural elements centered around each show’s female protagonist. Bewitched featured Samantha, a witch played by Elizabeth Montgomery, and I Dream of Jeannie focused on Jeannie, a genie played by Barbara Eden. Bewitched aired from 1964 to 1972, while I Dream of Jeannie ran from 1965 through 1970, each proving successful enough to remain in syndication for decades after their final episodes. Even though both Bewitched and I Dream of Jeannie were popular network sitcoms that aired from the mid 1960s to the early 1970s, they feature notably different approaches to onscreen representation of their female protagonists. Jeannie, an actual wish-granting genie, is often scantily clad in exoticized garb of pinks, greens, and blues that stand out against the military outfits of most of the male characters, an Orientalist image amid Cold War paranoia. Samantha, a domestic and suburban witch married to human Darrin Stephens (played by Dick York initially and later Dick Sargent), often wears modern dresses of the day throughout the eight seasons of Bewitched, even occasionally repeating outfits due to the show’s limited budget (James). Her wardrobe may have been uninspiring, but her creativity with magic inspired a large viewership and adoring fans.

Arnold, Tilton, and Berke’s original scope was focused on explaining the structure and outcomes of their computational methods. Here, we instead turn to some of the contexts to which these analyses, understood as formal analyses, can be related. Both sitcoms and their respective protagonists received significant popular acclaim during their initial airings; in subsequent decades, critical attention has been paid to each show’s content, aesthetic, and cultural cachet. For instance, scholars have delved into I Dream of Jeannie’s on-screen Orientalism (Abraham; Bullock, “Orientalism on Television”), its depiction of gender norms and roles (Humphreys; Kornfield), domesticity (Humphreys), and sexuality (Reilly). Likewise, Bewitched has been analyzed for similarly overlapping themes, including gender roles (Stoddard) and domesticity (McCann) along with additional studies of queerness (Miller; Drushel) and its depiction of witches (Baughman et al.; Weir). Here, in this article, we ask, how do these historically and culturally situated themes map onto formal aesthetics in these two shows through elements like onscreen presence, film shots, and more? We seek to consider how the semiotics of time-based media, which is amenable to computational approaches through distant viewing, can then be mapped onto established theory about how camera angles, lens focus, and composition communicate social relations and power structures.

Structured information can provide insights into key formal aspects of television, including production or editing style as well as implicit biases in on-screen gendered representation. Rather than searching for a unifying form to either Bewitched or I Dream of Jeannie, Arnold, Tilton, and Berke focus on how “face detection and recognition algorithms, applied to frames extracted from a corpus of moving images, can capture formal elements present in media beyond shot length and average color measurements” (2).[1] The distinction between face detection and recognition becomes important. Though the former is a computational way of viewing that does not care about the particularities of the character, the latter allows us to encode important context. Through these methods, Arnold, Tilton, and Berke can now show who is on screen and who isn’t. While both shows are named after and ostensibly feature young women, face recognition revealed significant discrepancies regarding who gets to be represented on screen, and how. As the work on the sitcoms shows, rather than simply finding a general “face,” face recognition creates space to train the algorithms with specific context that animates our areas of interest. Tracking forms is not limited to formalism; it can also be used to link to historical and social contexts.

Arnold, Tilton, and Berke illuminate the visual style of both television shows through shot boundary algorithms that addressed a formal element from film studies. Their approach was to measure, as they put it, “the extent to which two successive shots are different from one another in terms of the general color palette and the distribution of brightness over the frame” (6). Successfully detecting the presence or absence of faces and bodies and then combining with shot information could lead to more finely tuned computational approaches, such as recognizing when certain characters are on screen.
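To make the idea of measuring difference in "the distribution of brightness over the frame" concrete, the following is a minimal sketch rather than the authors' implementation. It assumes frames are small grids of grayscale values, compares brightness histograms of successive frames, and flags a possible cut when the difference passes a threshold. The function names, the eight bins, and the 0.5 threshold are all hypothetical choices made for illustration, and color palette is left out entirely.

```python
from typing import List, Sequence

Frame = Sequence[Sequence[int]]  # rows of grayscale values in 0..255


def brightness_histogram(frame: Frame, bins: int = 8) -> List[float]:
    """Return the share of pixels that fall in each brightness bin."""
    counts = [0] * bins
    total = 0
    for row in frame:
        for value in row:
            counts[min(value * bins // 256, bins - 1)] += 1
            total += 1
    return [c / total for c in counts]


def histogram_distance(a: List[float], b: List[float]) -> float:
    """Half the L1 distance: 0.0 for identical histograms, 1.0 for disjoint ones."""
    return 0.5 * sum(abs(x - y) for x, y in zip(a, b))


def find_cuts(frames: Sequence[Frame], threshold: float = 0.5) -> List[int]:
    """Return each index i where frame i differs sharply from frame i - 1."""
    hists = [brightness_histogram(f) for f in frames]
    return [
        i
        for i in range(1, len(hists))
        if histogram_distance(hists[i - 1], hists[i]) > threshold
    ]


# Toy frames (invented): two dark frames, two bright frames, one dark frame.
dark = [[10, 20], [15, 25]]
bright = [[240, 250], [245, 235]]
cuts = find_cuts([dark, dark, bright, bright, dark])
```

In this toy sequence the sketch reports a boundary where the dark frames give way to bright ones and again where they return. A slow fade would stay under the threshold at every step, which is one reason a fixed cutoff like this is only a starting point.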

Formal elements pertaining to both Samantha and Jeannie revealed trends in onscreen visual representation beyond fashion and gender-based roles. Applying face detection algorithms to a corpus of moving images that contain each episode of both Bewitched and I Dream of Jeannie enabled them to see the average length of time for which each primary character is on screen. Arnold, Tilton, and Berke show how this approach to computational formalism revealed that Samantha and Darrin, the male lead character, “share the lead with an average of 7 minutes of screen time per episode,” while Jeannie is “seen for less than an average of 4 minutes per episode,” and Tony is on screen for “just under 11 minutes per episode” (18). In revisiting this previously published project through questions of computation, form, and context, we can now see that this study speaks to Wasielewski’s concern that computational formalism will often focus simply on comparative methodologies without larger contextual considerations. Here, we revisit evidence from the original paper by Arnold, Tilton, and Berke with a new formalist perspective, drawing together and emphasizing elements in order to highlight how Jeannie’s low onscreen representation aligns with the show’s narrative context: that Jeannie is an actual genie granting wishes to her love interest, Captain Tony Nelson (played by Larry Hagman), with much of each episode’s plot revolving around Tony having to grapple with and clean up the effects of Jeannie’s magic. By contrast, Samantha and Darrin’s shared, equal onscreen representation suggests “not only that their marriage lies at the heart of the show but that theirs is an equitable and egalitarian coupling” (18). In this way, we can see different historical representations of gender norms play out in computational, quantifiable evidence.
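The arithmetic behind an "average minutes of screen time per episode" figure can be sketched briefly. The sketch below is an illustration under stated assumptions, not the original study's pipeline: it assumes that a face recognition step has already produced, for each sampled frame, a list of recognized character names, and that frames were sampled at a fixed interval. The data structure, the function name, and the toy values are invented and do not reproduce the published figures.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# One record per sampled frame: (episode id, names recognized in that frame).
Detection = Tuple[str, List[str]]


def average_screen_minutes(
    detections: Iterable[Detection], seconds_per_sample: float
) -> Dict[str, float]:
    """Average minutes on screen per episode for each recognized character."""
    frames_seen: Dict[str, int] = defaultdict(int)
    episodes = set()
    for episode, names in detections:
        episodes.add(episode)
        for name in set(names):  # count a character at most once per frame
            frames_seen[name] += 1
    if not episodes:
        return {}
    return {
        name: count * seconds_per_sample / 60 / len(episodes)
        for name, count in frames_seen.items()
    }


# Toy data (invented): two episodes, one sampled frame per minute.
detections = [
    ("ep1", ["Tony"]),
    ("ep1", ["Tony", "Jeannie"]),
    ("ep1", ["Tony"]),
    ("ep2", ["Tony"]),
    ("ep2", []),
    ("ep2", ["Jeannie"]),
]
averages = average_screen_minutes(detections, seconds_per_sample=60.0)
```

The interpretive work begins only after such a table exists: the numbers say who is visible and for how long, while the reading of what that visibility means draws on the show's narrative and historical context.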

In another example, we might here build off Arnold, Tilton, and Berke’s work in order to look at how often each primary character appears in the opening shot at the start of an episode. These first shots are significant in establishing connection between the audience, the onscreen actors, and their characters.

Our newly composed Figure 1 helps clarify the ways in which gendered representation is entrenched in these opening shots. Samantha appears in the opening shot for the vast majority of the show’s episodes, while Jeannie is rarely seen in these opening shots compared to Tony. This further demonstrates that within Jeannie’s own show, the show’s narrative more often begins with Tony, while Samantha’s actions and story drive the introductory scenes of Bewitched.

These and other findings related to shot classification provide key insights into the visual style of two Network-era sitcoms as well as methods for reading necessary contextual elements into methods of computational formalism. This study by Arnold, Tilton, and Berke also allows for confirming quantitatively important interventions while deepening our understanding of these two television shows. Combining algorithms, such as shot boundary algorithms, with face detection allowed for finding multiple kinds of shot editing and framing: group shots with multiple characters; two-shots with just two characters; close-up shots focused on just one character; over-the-shoulder shots with two characters, one facing the camera and one away from the camera. Face recognition provided information about exactly who is on screen and where they are on screen. Arnold, Tilton, and Wigard are now working on a larger corpus of sitcoms and layering additional approaches. Combining shot type (shot boundary detection), with who is on screen (face detection and recognition), where they are on screen (custom calculations), and their body language (pose detection) opens a more nuanced analysis of social and power relations.

Photographs: Computing Context through Genre

Looking at hundreds, much less thousands, of photographs is challenging. Keeping all the elements together is why methods for visual content analysis from different disciplines abound (Van Leeuwen and Jewitt; Kress and Van Leeuwen; Araujo et al.). The process of creating a spreadsheet manually and tagging and classifying elements of images has given way to automated processes facilitated by computer vision and the commitment of institutions to digitization and open access (e.g., see Smithsonian Open Access; Library of Congress’s open access books and digitization efforts; the Rijksmuseum open data initiative, RijksData; and Arnold, Ayers, Madron, et al.). Melvin Wevers and Thomas Smits, for instance, illustrate both the potential for and difficulty of using convolutional neural networks (CNNs) to separate photographs from illustrations within the pages of historical newspapers. Their method, as they note, entails classifying images based on the images’ “abstract visual elements,” training CNNs to place images into categories of content such as buildings, cartoons, maps, schematics, and sheet music based on visual similarities or differences.[2] Similar efforts include Matthew Lincoln, Julia Corrin, Emily Davis, and Scott B. Weingart using computer vision to help generate metadata tags for over 20,000 images from the Carnegie Mellon University Archives General Photograph Collection; the Illustrated Image Analytics project at North Carolina State University’s experimentation with the study of historical illustrations in Victorian newspapers (Fyfe and Ge); the Photogrammar project that explores over 170,000 historical photographs from the FSA and OWI agencies of the U.S. Federal Government from 1935 to 1943 (Arnold, Ayers, Madron, et al.); notably too, the Yale Digital Humanities Laboratory’s collaborative study with the Getty Research Institute on the Ed Ruscha archive of over 500,000 files, including photographs and negatives, associated with the Los Angeles Sunset Strip (Di Lenardo et al.).

Studying formal and compositional features such as the presence of a face and the amount of visual space that faces occupy on the image offers a computational proxy for classifying photographs as genres like studio photography. Genre offers important context for understanding visual culture. We turn to a collection of photographs from the late nineteenth century and early twentieth century by revisiting previous work by Arnold, Tilton, and Wigard in which they apply automatic processing and classification of the George Grantham Bain Collection at the Library of Congress, which has been digitized for public viewing and use. We now aim to demonstrate how computational formalism here manifests in multiple ways.[3] According to the Library of Congress:

The George Grantham Bain Collection represents the photographic files of one of America’s earliest news picture agencies. The collection richly documents sports events, theater, celebrities, crime, strikes, disasters, political activities including the woman suffrage campaign, conventions and public celebrations. The photographs Bain produced and gathered for distribution through his news service were worldwide in their coverage, but there was a special emphasis on life in New York City. The bulk of the collection dates from the 1900s to the mid-1920s, but scattered images can be found as early as the 1860s and as late as the 1930s. (“About the George Grantham Bain Collection”)

As the Library of Congress notes, the bulk of the photographs contain Bain’s own news documentation. Yet, while the broad contents of the Bain collection are known and can be visually seen or understood as pertaining to the topics noted above, much of the collection arrived without accompanying documentation or explanatory descriptions. We can use computational formal analysis to better understand the contents of the collection and produce new categories, subsets, and trends within the collection not covered or addressed by what documentation does exist. Wevers, Nico Vriend, and Alexander de Bruin explore similar questions of unknowability in historical archives, applying transfer learning and computer vision, particularly emphasizing scene detection in their study of photography collections (Wevers et al.). To this end, we emphasize new aspects to past research findings from Arnold and Tilton, as well as Arnold, Tilton, and Wigard on the Bain collection that used face detection algorithms to learn more about the human subjects within Bain’s photographic corpus. We do so to offer a different perspective grounded in formalism with the potential to also uncover new formal elements that might go otherwise unnoticed across the over 30,000 photographs.

In prior research by Arnold and Tilton, face detection allowed for quickly sorting the collection into two major categories: those with human subjects and those without. Arnold and Tilton demonstrated that applying face detection algorithms reveals a high proportion of photographs with one person detected (nearly 20,000 photographs) and substantially lower proportions of photographs with multiple subjects or with no subjects (Arnold and Tilton, “ADDI: Methods Paper” 16). They were interested not just in whether computer vision can detect faces and bodies, but what proportion those people take up within the frame itself. This kind of proportionality is significant: who is the subject of this photograph? Who comes to the fore and who is relegated to the background?

In a talk titled “Data Trouble” given at University of Richmond on Wednesday, November 15, 2023, Miriam Posner pushed at these ideas through a lively discussion of what happens when we consider the possibility that “anything can be expressed as data.” She offered several principles of working through the datafication of humanities corpora and particularly visual media: demarcate the data; parameterization; ontological stability; replicability; boundedness; and deracination. Computer vision also helps work through Posner’s considerations of parameterization. As Posner prompts, “How can you measure one data point against another? As one example, we know that time doesn’t always operate the same for everyone.” Referring back to Arnold, Tilton, and Wigard’s results from their paper on automatic identification and classification of historical photographs, we can now observe that algorithmic processing will not necessarily give us information about who the subjects are, but their posing, backdrops, and costuming do offer parameter-based opportunities to compare these photographs against one another as data points. This, in turn, enables a different kind of demarcation beyond the initial construction of the dataset: one subset of photographs can be demarcated into studio portraiture based on their formal aspects and another into activity photographs. Computational methods offer a strategy for mapping onto the genres that animate categorization and analysis of twentieth-century photography.

More specifically, face detection algorithms can not only find whether people are featured as the subject of Bain’s photography but also count how many people occupy a given photograph, along with the proportion of the frame that they take up in front of the photographer’s camera. As one example, Arnold and Tilton demonstrated a trend in photographs with only one subject: these fall into two groups, one where the subject takes up roughly 50% of the image and another where the subject occupies only about 20% of the image (Arnold and Tilton, “ADDI: Data Analysis Paper” 4).
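As a rough illustration of how proportion within the frame can become a proxy for genre, the sketch below computes the share of an image covered by a detected bounding box and assigns a provisional label. It is a hedged sketch, not the method used in the ADDI papers: the box format, the function names, the label strings, and the 0.35 cutoff (simply a point between the two clusters described above) are assumptions made for illustration.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def subject_share(boxes: List[Box], width: int, height: int) -> float:
    """Share of the image area covered by the largest detected box."""
    if not boxes:
        return 0.0
    largest = max((right - left) * (bottom - top) for left, top, right, bottom in boxes)
    return largest / (width * height)


def provisional_genre(
    boxes: List[Box], width: int, height: int, cutoff: float = 0.35
) -> str:
    """Assign a provisional, human-reviewable label from box count and size."""
    if not boxes:
        return "no person detected"
    if len(boxes) > 1:
        return "multiple people"
    if subject_share(boxes, width, height) >= cutoff:
        return "portrait-like"
    return "activity-like"


# Toy detections (invented) on a 1000 x 800 pixel image.
close_subject = [(200, 0, 700, 800)]    # covers half of the frame
distant_subject = [(400, 0, 600, 800)]  # covers one fifth of the frame
labels = [
    provisional_genre(close_subject, 1000, 800),
    provisional_genre(distant_subject, 1000, 800),
    provisional_genre([], 1000, 800),
]
```

The labels are deliberately provisional. A cutoff can separate two clusters of numbers, but calling one cluster "studio portraiture" is a claim grounded in the history of photography, which is why sampling and looking at the photographs remains part of the method.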

Those images with a person occupying the majority of the frame are most commonly studio portraits: profile photographs of a singular subject gazing at or just beyond the camera lens, most often photographed against a dark or neutral background. This particular trend can be seen in Figure 2, which features a screenshot of the online ADDI Visualizer tool originally created by Arnold and Tilton. The visualizer has not only annotated the face of the subject in a portrait but brought up photographs that the annotator reads as similar based on visual similarity. Note here that all these particular photos are of female-presenting people in studio portrait-style photographs, each with lighter skin and darker hair against primarily neutral backgrounds. Conversely, the images where a human body occupies a minority of the image are more often activity photographs, where a singular person is engaging in some performance or act including dancing, sports, or in the case of some of the political photographs, engaging in protest.

Random sampling of these two smaller datasets reveals more historically minded insights and questions. Building on another article on the subject, Arnold, Tilton, and Wigard found that the Bain Collection’s studio portrait subjects “are primarily men and many appear to be white” (Arnold, Tilton, and Wigard, “Automatic Identification” 32). This revealed that there are “potential patterns about how Bain visually defined news through the centering of people,” and through centering particular groups of people—in this case, white men. However, Posner’s comments about the boundedness of datasets encourage us to revisit this past piece and reframe that thinking with computational formalism in mind. Rather than turning other aspects of their identity into formal categories algorithmically, this is where we should turn to further archival research and biographical information about the subjects. Rather than searching for the unified form to one historical news agency’s approach to documenting life at the turn of the twentieth century, we use those previously published findings to posit that computational formalism can open up new questions about photo elements such as subject, composition, and themes in early twentieth century approaches to news documentation in photography.

Computational formalism can support insights into the Bain Collection, but at the same time it cannot answer every question nor provide knowledge into all aspects of the corpus. Much of the Bain Collection was lost in a fire, so there will always be gaps in understanding more of the full scope of the Bain News Service. Likewise, algorithmic annotators can find faces, poses, and even portrait directions, but these still lead to more questions about the Bain collection, and the early twentieth century more broadly. Who are the people within these photographs, and why did the Bain News Service choose these subjects over others? What does the periodic focus on studio portraiture tell us about the function, goals, and evolution of an early twentieth century news agency helmed by Bain? What we can do though is map computational analysis onto formal and contextual elements such as genre, and draw on the histories of photography, to label and embed historical context in computational analysis. To further explore how contextual factors and elements can be mapped on to computational formalism, we turn to an illustrative medium, rather than a televisual or photographic one: comic strips.

Comics: Computing Context Through Panels

The third case study that we address focuses on comic strips, which bring together interesting challenges for formalism and the study of forms. We begin by working through previously published research for this journal by Arnold, Tilton, and Wigard that applied computational methods to the entirety of the classic mid-twentieth century American comic strip Peanuts by Charles Schulz (Arnold, Tilton, and Wigard, “Understanding Peanuts”). We revisit this scholarship here to thoroughly unpack what explorations of comics formalism can show about panel detection and how text detection algorithms see comic features. Next, we move to addressing forthcoming research by Wigard. This forthcoming research uses a combination of computational methods and archival intervention to historicize the role of panels, a key narrative element, in popular newspaper comic strips.

Studying illustrative media with computational methods can be a complicated affair, particularly when working to find formal elements in media such as comic strips or graphic novels. Comics creator and theorist Scott McCloud, print scholar Jared Gardner, and historian RC Harvey argue that what marks comics as a distinctive medium is their sequential use of panels and text. Because existing OCR methods and approaches excel at finding text and its positioning within comics, Arnold, Tilton, and Wigard’s previously published work on using computational methods for studying comics began with finding arguably the most basic and defining formal component to the comic strip: the panel.

Panels establish scenes, stories, pacing, and settings, even lengths of time. They help determine reading order and focal points, inviting the reader to participate in meaning-making by imagining what happens between two panels. On average, daily U.S. newspaper comic strips are made of either three or four panels due to newspaper layout spacing, while certain Sunday comic strips run on average between eight and twelve panels due to an expanded layout space. Newspaper cartoonists present a particularly interesting formalist case study because they work on these comic strips daily, which corresponds to close to 350 comic strips every year, often for decades. Thus, to study comic strip panels is to study them historically and often at scale. Yet, this same opportunity presents a challenge: how does one examine forty or fifty years of comic strip images? Previously, Arnold, Tilton, and Wigard showed how computational formalism offers in-roads to beginning to understand such illustrative media, and that computational methods can provide insights into how cartoonists use key formal elements like panels across their entire career.

In “Understanding Peanuts and Schulzian Symmetry,” Arnold, Tilton, and Wigard explained that their first approach was to use automated processing to find visual elements of the comics. More specifically, they turned to automated detection to try to overcome the sheer scale of comic strips. Traditionally, comic strips are studied through a combination of literary close reading, historical analyses, and cultural studies, with explorations of the balance and tension between visual, textual, and narrative components. To find panels, Arnold, Tilton, and Wigard (2023) developed an algorithm that scans pixels in a digital comic strip file to find unbroken black lines surrounded by white space, essentially “creating a path of adjacent pixels that are also all white. This set of reachable pixels can be associated with the background of the comic.” The algorithm then records the line’s vertices until there are x- and y-coordinates for each corner of the panel, before moving on through the rest of the comic strip image. Once this image has been completely scanned, the detector moves on to the next comic strip image in sequence. The panel detector was combined with a caption detection algorithm based on bounding boxes to find the center of each bounding box, which can be used to determine which panels have captions and in what sequence.
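The idea of treating the set of white pixels reachable along a path of adjacent white pixels as the background can be sketched in a few lines. What follows is a simplified illustration, not the published algorithm: it assumes a strip already reduced to a grid of white and dark pixels, starts the search from the image border, and reports a bounding box for each region the background cannot reach. The names, the grid encoding, and the toy strip are hypothetical.

```python
from collections import deque
from typing import List, Tuple

Grid = List[List[int]]           # 1 = white pixel, 0 = dark pixel
Box = Tuple[int, int, int, int]  # (left, top, right, bottom), inclusive

NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def background_mask(grid: Grid) -> List[List[bool]]:
    """Mark white pixels reachable from the border through adjacent white pixels."""
    h, w = len(grid), len(grid[0])
    seen = [[False] * w for _ in range(h)]
    queue = deque()
    for y in range(h):
        for x in range(w):
            on_border = y in (0, h - 1) or x in (0, w - 1)
            if on_border and grid[y][x] == 1:
                seen[y][x] = True
                queue.append((y, x))
    while queue:
        y, x = queue.popleft()
        for dy, dx in NEIGHBORS:
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w and not seen[ny][nx] and grid[ny][nx] == 1:
                seen[ny][nx] = True
                queue.append((ny, nx))
    return seen


def panel_boxes(grid: Grid) -> List[Box]:
    """Bounding boxes of connected non-background regions, sorted left to right."""
    h, w = len(grid), len(grid[0])
    background = background_mask(grid)
    visited = [[False] * w for _ in range(h)]
    boxes: List[Box] = []
    for y in range(h):
        for x in range(w):
            if background[y][x] or visited[y][x]:
                continue
            left = right = x
            top = bottom = y
            visited[y][x] = True
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                left, right = min(left, cx), max(right, cx)
                top, bottom = min(top, cy), max(bottom, cy)
                for dy, dx in NEIGHBORS:
                    ny, nx = cy + dy, cx + dx
                    inside = 0 <= ny < h and 0 <= nx < w
                    if inside and not background[ny][nx] and not visited[ny][nx]:
                        visited[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((left, top, right, bottom))
    return sorted(boxes)


# Toy strip (invented): "#" is ink, "." is white; two bordered panels and a gutter.
strip = [
    "...........",
    ".####.####.",
    ".#..#.#..#.",
    ".####.####.",
    "...........",
]
grid = [[1 if ch == "." else 0 for ch in row] for row in strip]
panels = panel_boxes(grid)
```

The sketch depends on every panel having a closed border. If a border is left open, the background path leaks into the panel interior and the panel no longer registers as a separate region, so the formal convention of the drawn frame is built into what this kind of detector can see.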

Thinking through the boundaries of Arnold, Tilton, and Wigard’s research into the entire corpus of Peanuts, we here take Wasielewski’s claim to heart, that “maybe there is no way to completely zoom out and get an accurate picture of the whole” (60). Through the process of distant viewing, choices are made about what visual elements to include and exclude to prioritize the detection of key formal components like the panel and the placement of captions. Or, as considered through the work of semioticians like Lévi-Strauss, Dyer, and Chandler, interpretive decisions must be considered regarding the encoding and decoding of visual comics signs for data collection.

In this work on Peanuts, Arnold, Tilton, and Wigard made intentional choices to ignore Schulz’s signature when possible. Ordinarily, a signature is a key element to any comic strip in that it acts as a visual record of artistic ownership and craftsmanship. This is particularly significant in comic strips when multiple authors can work on the comic strip throughout its lifetime, whether named authors or those working under pseudonyms, though Schulz famously was the only cartoonist to work on Peanuts. As Arnold, Tilton, and Wigard argued in the article’s section on “Peanuts: Caption Detection,” however, the presence of the signature acted as a kind of visual hiccup or static for purposes of detecting captions. If what scholars are interested in are those textual insertions within word bubbles and exclamations, then they need to filter out text that appears elsewhere within the comic strip. This meant intentionally writing the text detection algorithm to ignore captions that appear in the bottom third of the comic strip when possible.
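One way to picture this filtering step is the short sketch below. It is an illustration under stated assumptions rather than the rule used in the published study: boxes are assumed to be `(x0, y0, x1, y1)` tuples in image coordinates with y increasing downward, a detection is judged by the vertical center of its box, and the function name `drop_bottom_third` and the sample values are hypothetical.

```python
def drop_bottom_third(text_boxes, strip_height):
    """Keep text detections whose vertical centre lies above the bottom third."""
    cutoff = strip_height * 2 / 3  # y grows downward in image coordinates
    return [box for box in text_boxes if (box[1] + box[3]) / 2 < cutoff]


# Made-up detections on a strip 300 pixels tall: one near the top of a panel,
# one low in the frame where a signature might sit.
detections = [(10, 20, 110, 60), (250, 260, 300, 290)]
kept = drop_bottom_third(detections, strip_height=300)
```

A fixed cutoff like this also discards any genuine caption placed low in the frame, which is exactly the kind of interpretive choice about inclusion and exclusion discussed above.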

Further, through an exploration of another contemporary American comic strip, Lynn Johnston’s For Better or For Worse, a forthcoming study by Wigard reveals how computational methods can only bring us so far before comics formalism begins to resist algorithmic detection (Wigard, forthcoming). For Better or For Worse, a critically and commercially successful strip about a suburban family in Canada, ran from 1979 to 2008, providing another excellent case study of scale. In this study, Wigard used the panel detection algorithm initially created for Arnold, Tilton, and Wigard’s article on Peanuts,[4] which worked successfully with the first twenty years of For Better or For Worse comic strip images (Wigard, forthcoming). Wigard first applied the algorithm to a corpus of Johnston’s For Better or For Worse comic strips with particular focus on the differences between daily panels and Sunday panels. This created datasets that showed panel frequency and averages for daily and Sunday comic strips. However, the algorithm struggled with panel detection after 2001 due to Johnston’s extensive usage of nonstandard panels without clearly defined black borders that used open-ended “bleeds” to blur the line between the interior and exterior of the panel (Cohn et al.; Corcoran; McCloud). Wigard then turned to archival records of original art to better understand how Johnston used such nonstandard panels, historically situating these trends within the larger corpus of her work. Such archival interventions confirm that Johnston, in fact, periodically used these open-ended panel bleeds throughout her career.
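The kind of summary mentioned above, panel averages split by daily and Sunday strips, can be sketched in a few lines. The records here are invented toy values, not figures from the study, and the function name `mean_panels_by_format` and the choice to infer the format from the publication date are assumptions made for illustration.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def mean_panels_by_format(records):
    """Average detected panel count for daily and Sunday strips.

    `records` is an iterable of (publication_date, panel_count) pairs.
    """
    groups = defaultdict(list)
    for day, n_panels in records:
        kind = "sunday" if day.weekday() == 6 else "daily"  # Monday is 0
        groups[kind].append(n_panels)
    return {kind: mean(counts) for kind, counts in groups.items()}


# Invented example values: two weekday strips and one Sunday strip.
toy_records = [
    (date(1985, 3, 4), 4),
    (date(1985, 3, 5), 3),
    (date(1985, 3, 10), 9),
]
averages = mean_panels_by_format(toy_records)
```

Tracking such averages year by year is one way a sudden drop in detected panels could be noticed and then checked against the strips themselves.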

Within Wigard’s individual study, the bleeds in Johnston’s early comics were often partial ones that Arnold, Tilton, and Wigard’s panel detector was able to handle; later in the comic strip’s publishing history, these panel bleeds and nonstandard borders are more frequent and pronounced (Wigard, forthcoming). This provides opportunities to zero in on earlier instances of this formalistic quality in a long-running comic strip, as well as to prompt more questions about the nature of cartoonists’ formal aesthetics evolving throughout their career. The attempt to computationally detect formalism can show shifts in comics’ formal evolution, even moments of comics’ resistance to digital methods of seeing, and prompt reconsiderations of how to refine algorithms to better grapple with illustrative media.

At the same time, it is crucial to keep Posner’s enlightening comments about boundedness in mind here: “We can’t know everything: Data has a way of reifying itself. We don’t have a way of discussing absences or gaps in datasets.” Wigard’s study adds to past research by complicating what it means to engage with a comics data corpus fully, to see the wholeness of an entire published comic strip, and thinking productively about the glitches that emerge in the process of addressing wholeness, absences, and/or gaps in datasets. These gaps in computational comics datasets provide opportunities for us to fill in the gutter in an act of McCloudian closure: the imaginative act of stitching together the multimodal narrative of text and image between panels, or in this case, crucially connecting contextual factors to computational formalism (McCloud 63).

While Arnold, Tilton, and Wigard’s previous study on Peanuts showed successful applications of computational methods for studies of comic strips, Wigard’s research on For Better or For Worse shows that when we use computation to search for formal elements, glitches can arise, and these glitches prompt us to dig deeper for contextual understanding. We also think here of the work by Legacy Russell on the liberatory potential of glitches, of connecting glitches not as schisms in our comic strips dataset, but necessary connections to the cartoonists at the heart of this case study, to their lived experiences and artistic styles (Russell 7). Likewise, we would be remiss not to acknowledge the glitch art, theory, and studies work accomplished by Rosa Menkman, particularly her call to view “bends and breaks as metaphors for différance” and to “Use the glitch as an exoskeleton for progress” (346). In essence, then, Wigard’s study of Lynn Johnston’s comic not only reveals more about her particular visual aesthetic, but prompts considerations of other so-called glitches in the corpus of For Better or For Worse: caption detection errors and/or misattributions that arise from Johnston’s uniquely expressive lettering which often breaks panel borders.

Here, we turn to new and previously unpublished work on another cartoonist, Morrie Turner, to consider what glitches can further reveal about comics formalism. An award-winning Black cartoonist, Turner is the creator of the long-running syndicated comic strip Wee Pals (1965–2014), which follows the daily antics of a racially, ethnically, and culturally diverse cast of kids. The origins and content of Wee Pals are interesting as Turner was inspired to create the comic strip to reflect his own childhood growing up in Oakland, California, surrounded by children from all backgrounds. But the formal elements of Turner’s strip can provide further insight into computational formalism due to Turner’s nonstandard aesthetic. Rather than using rote, square, or rigid panels, Turner frequently deployed asymmetrical panels, circular frames, organic shapes, and quite often borderless panels: all shapes that very clearly disrupt our automatic panel detector. While Johnston’s panel usage offered early inroads to thinking about functional glitches in comics datasets, Turner’s formal elements seem to actively resist this particular approach to computational formalism, so much so that we turn to additional methods of counting and compiling data on Wee Pals.

Manual annotators like the Multimodal Markup Editor allow for careful annotation of visual, textual, and narrative elements within multimodal files, particularly comics. Developed by Rita Hartel for the Hybrid Narrativity Project, the visual editor allows users to load in image files, then use premade tools to annotate, or mark up, that file. Users can click on an element in the image they want to annotate (say, a panel), then mark out its boundaries with a selection tool, add descriptive metadata or details to the annotation, and export the data into an XML file. The tool allows for the annotation of several elements, such as panels, subpanels, word balloons, captions, characters, onomatopoeia, diegetic text, and objects. The editor thus affords markup of not just panels and word balloons, but additional annotated layers that can be laid on top of one another as well, as with characters, onomatopoeia, and objects. Further, the tool affords separation of both text and panels into different aspects for more nuanced study. Whereas the earlier automatic approach used in both Wigard’s forthcoming research and Arnold, Tilton, and Wigard’s previously published scholarship was primarily concerned with a) detection of text, and b) placement of text, the Multimodal Markup Editor has functionality to annotate word balloons (i.e., speech bubbles coming from characters), captions (i.e., text existing outside of the time and space of the comic), and diegetic text (i.e., text about the world of the comic). The editor also allows for annotation of standard panels and layers for subpanels, those within an existing or larger panel. While such manual annotators are necessarily slower than computational methods, that same slower pace encourages us to consider the nonstandard formal traits of cartoonists like Turner anew through digital means.

Russell reminds us that the glitch is often seen as an error, but this error leads to new possibilities of thinking through what it means to glitch and to be a glitch:

Glitches are difficult to name and nearly impossible to identify until that instant when they reveal themselves: an accident triggering some form of chaos … but errors are fantastic in this way, as often they skirt control, being difficult to replicate … errors bring new movement into static space. (73–75)

Here, Russell is primarily thinking about the interconnectedness of bodies and technology. But we think here about the ways in which glitches are occurring in the comics form itself, where the very literal ink of the comics form is representative of embodied experiences and aesthetic choices. The idea of the glitch in conjunction with manual annotation thus provides new insight into the historicity of comics formalism.

In some ways, this aligns with Ernesto Priego and Peter Wilkins’ argument that the array of panels in a comics grid, whether comic strip or graphic novel page, “can be understood as a specific technology,” particularly when mediated digitally or when read at scale (1). They continue by explaining to readers that panels and the comics grid are largely invisible to the reader, akin to a theater stage, until the reading experience is disrupted in some fashion. In such instances, Priego and Wilkins note that the more standardized, “consistent, steady, indifferent” a comics grid is, the more technologically it has been used and that the comics grid features more aesthetics when it has been altered functionally or artistically (5).

If we think of the comics form as a kind of technology, then these disruptions operate like glitches. While the perceived glitchiness of Johnston’s work prompted consideration of additional cartoonists, the use of computational methods to identify Turner’s own formal glitchiness can bring new insight to the study of comics formalism. His disruption of this particular approach to computational formalism is indeed glitchy, but it is also an aesthetic feature. Wigard is in the process of using the Multimodal Markup Editor to manually annotate Turner’s work, as well as the work of other newspaper cartoonists who use nonstandard panels and formalist elements like Bill Watterson, in order to generate a new corpus of comics data. Figure 3, for instance, highlights the ways that even the Multimodal Markup Editor’s automatic detection of panels struggles with correctly labeling formal elements in Wee Pals. This preprint comic strip, dated October 22, 1968, clearly represents multiple formal traits mentioned above, where word balloons break panels that are often nonstandard shapes. The figure further showcases our efforts to correctly label these panels and other diegetic elements like characters, word balloons, and paratext, with bounding boxes using the Editor’s layering function.

Ultimately, computational formalism in comics provides inroads to understanding often subtle trends that occur across decades of publication within the context of a medium that is built on scale. While such algorithmic annotators can find commonalities owing to the oft-standardized form of the newspaper comic strip, the use of these annotators also requires contextual understanding, including the consideration of historical, cultural, biographical, and other elements. Taking comics formalism seriously (e.g., attending to panels, captions, and other formal features) and addressing it computationally offers opportunities to see where patterns hold and where they fail or fall apart. It is when the search for formalism falls apart that we see shifts in form needing to be situated, contextualized, and explored. Computational formalism, then, invites us to pay closer attention to subtle shifts in form and change that crucially require contextualization.

Conclusion: From Out-of-the-Box to Against-the-Grain

Harnessing inherited algorithms out-of-the-box offers possibilities and challenges. Their training data, ways of viewing, and final outputs do not always align one to one with intended areas of inquiry or interpretation. We often find ourselves aligning the results of computational analyses to a formal concept or visual feature that the tools were not built or imagined to be used to uncover. Rather than framing these algorithms’ generalizability as formalism, we could instead think of their generalness as opening an interpretative space for the kinds of inquiry that animate the humanities. There are limits to the use of these methods. Much of computer vision is built with a presentist mindset, in large part due to the training sets of neural nets and Multimodal Large Language Models (MLLMs). It often assumes the visual universality of a concept or thing, or at least hopes that the deep manipulations in the neural network or now MLLM can account for the differences in what the “same” object looked like a hundred years ago as today. It is unclear, but exciting, to see what the future holds in the age of generative AI. In the meantime, thinking creatively with existing algorithms—while designing one’s own—offers ways to think productively and differently about computation.

These three case studies demonstrate how analyzing alongside and against algorithms, and through their glitches, can incorporate history and context by mapping historically situated concepts onto computationally formal elements. Television holds the potential for connecting gender disparities with shot length, face recognition, and on-screen presence. Photography archives and collections prompt us to consider how the search for bodies and poses can overcome a lack of identifying features and metadata in large visual corpora. Of these three case studies, comic strips may be the most telling example of how formalism, the study of forms, and context may be more entangled and mutually informed. When readers just look, they may ignore glitches and moments where the form changes, dismissing them as outliers. But the search for fundamental elements like comic strip panels falls apart at scale, over and over; the present authors are invited to ask, why? The barrage of numerical failures that computational formalism can produce may be the seeds of why computing formal elements at scale is not easily classified as formalism or easily contained by formalism theory. Rather, formalism can be encoded but the decoding at scale may exceed the theoretical limits in which our algorithm was encoded. In other words, we may sometimes want to perform formalist analysis, but the algorithm that makes that possible may produce an excess of data and challenge our very efforts. It is this excess and failure that has often been a site of insights for us, of understanding that brings in context or at least prompts new questions.

Looking to different roads, we want to end by turning our gaze to the history of history, rather than English and literary studies, for a future path. The field of history learned the hard lessons of cliometrics and is now moving to focus on hermeneutics (Da; Jagoda; Fickers and Tatarinov; Schmidt). One of the most exciting computational tools is MapReader, developed by Hosseini, Wilson, Beelen, and McDonough, which uses computer vision to analyze maps at scale, offering a new avenue for spatial history. Expanding the network, we also see exciting work in areas such as theatre studies with work like Miguel Escobar Varela’s Theatre as Data and Visualizing Lost Theatres by Tompkins, Holledge, Bollen, and Xia. They are pushing the boundaries of what can be seen through technology by taking the Bard’s words to heart that “all the world’s a stage.” We join the work in this field by expanding on how and in what ways AI is opening interpretative space that foregrounds a central axiom of the humanities: context. Always equating formal viewing with formalism forecloses rather than makes space to theorize and contextualize through computational methods in the ways that animate the humanities. Unpacking how visual AI makes meaning, informed by theories like distant viewing, offers a constructive path forward.

Acknowledgements

The authors would like to thank the peer reviewer for the critical reflection and thoughtful engagement. Thank you for the generous feedback and supportive directions that significantly improved the article.

Associated algorithms and more information can be found at Arnold, Taylor, and Lauren Tilton, “Distant Viewing Toolkit for the Analysis of Visual Culture,” GitHub, Version 1.0.1, released on February 9, 2023, https://github.com/distant-viewing/dvt.

Smits and Wevers have both extended this work into new studies of historical photography at scale. See Wevers, Vriend, and Bruin; Smits, Wevers.

Associated algorithms and more information can be found at Arnold, Taylor and Lauren Tilton.

Associated algorithms and more information can be found at the following data repository page, Arnold, Tilton, and Wigard, “Replication Data For.”